To this end, the RECLAIM consortium organised an open training workshop last month on trustworthy Artificial Intelligence (AI) and the Circular Economy. The workshop's objective was to present the latest developments in AI, robotics, and socially sensitive gaming, and to show how these can support the circular economy and trustworthy AI.

The training program invited specialists in robotics and AI. These sessions are open to the public and aim to inform non-experts in AI, robotics and data management about the possibilities offered by new technologies, which are secured and framed by trustworthy and ethical AI.

As part of the training, the specialists covered a wide range of topics in the fields of AI and robotics, highlighting their benefits in the transition towards a Circular Economy. Topics included AI-driven robotic manipulation, the evaluation of ethics in AI, and intuitive interfaces in robotics for sustainability.

The first session, on AI-driven robotic manipulation, explored the benefits of integrating AI into robotic systems to create more intelligent and autonomous agents, especially in the healthcare industry. For example, AI can already offer expert advice based on patients’ historical data. This data is then stored in intelligent virtual machines for easy access by healthcare professionals.

The difference between robotics and AI is that the former uses hardware and programming to carry out semi- or fully autonomous tasks, while the latter is the area of computer science and software that is used to construct models to solve problems and assist humans. The field of AI includes machine learning and deep learning.

Evaluation of ethics: Can we put a number on ethics?

The second session on ethical AI delved into the question that is perhaps on all our minds: Can we put a number on ethics?

Laurynas Adomoaitis from CEA/LARSIM summed up just how big AI is: “We know AI is a big deal, the market capital of NVIDIA has surpassed that of Germany.” For context, NVIDIA is one of the world’s largest technology companies; its market capitalisation surpassed the $2 trillion mark in 2024, placing it alongside giants like Apple, Saudi Aramco, and Microsoft. It specialises in chip design, artificial intelligence (AI) and computing.

Given the rise of AI – catapulted to fame by slick and ubiquitous Generative AI platforms like ChatGPT, operated by OpenAI – one can’t help but ask: how can we evaluate the ethics of AI? And how can we measure ethics? While AI’s advancement could boost innovation and productivity, it carries risks such as misinformation, bias, and discrimination. As the world bets on AI as the next big thing, potential risks abound.

AI-augmented personalised roles, which replicate human faces and present an avatar view, are a case in point, said Laurynas. Despite being synthetic, these avatars evoke emotions and empathy that influence us. The same is true of advancements in language. This, Laurynas asserted, can lead to potential nudging and manipulation.

In relation to this, when humans project trust onto machines and form emotional connections, it invariably creates an asymmetric relationship as machines cannot reciprocate. Other harms include bias, discrimination, and information hazards, where personal data can be extracted by engineers.

To address these risks, the EU has put in place a regulation called the AI Act, which classifies AI systems by risk level: unacceptable risk, high risk, limited risk and minimal risk, each with different requirements. Under this regulation, a significant share of current AI systems falls into the minimal risk category.

An ethical approach should be implemented, but the question is how.

Laurynas said that the evaluation of ethics should be factored into the design phase itself, citing Recital 27 of the EU’s AI Act.

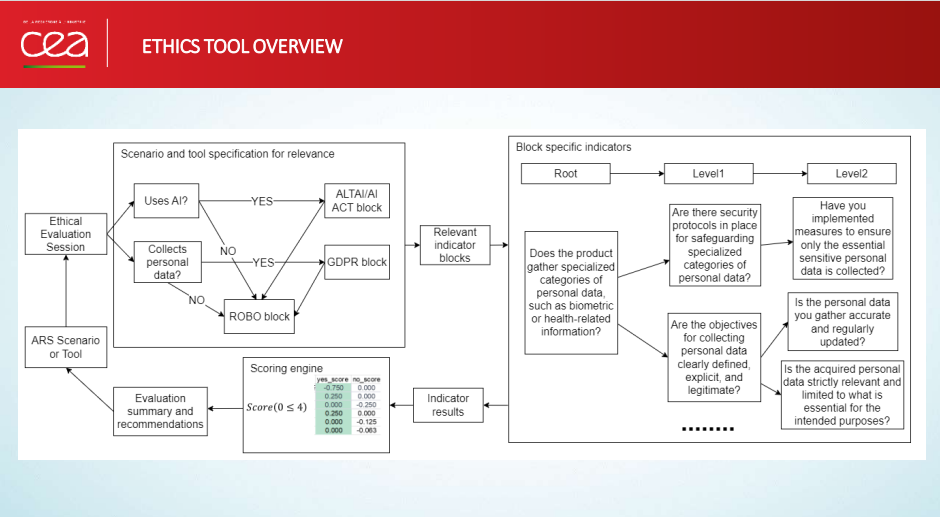

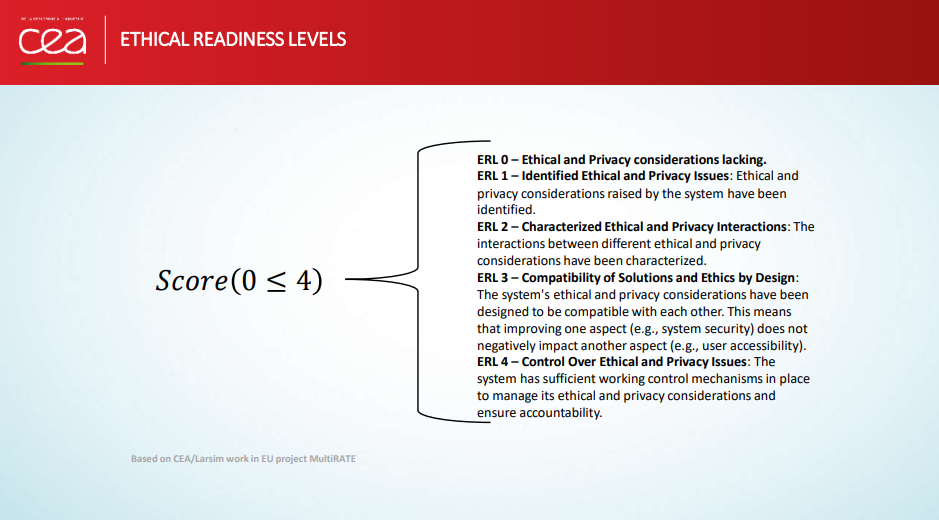

To integrate ethical design in technology development, an evaluation session between a technology developer and an ethics expert is essential. Together they can identify key ethical indicators and assess, for example, whether the robot uses AI and collects personal data, and so whether GDPR compliance must be ensured.

Discussions between the experts are crucial to building an understanding of the project details and making informed decisions. Ethical scoring is validated and adapted based on feedback from other European projects. The goal isn’t the score itself, Laurynas added, but the insights gained and the resulting design improvements, which are reassessed over development cycles to track progress over time.

Things to remember:

- Scores are relative to the application and risk environment.

- Track ethical progress over time, not just scores.

- Always involve both ethics and tech experts in evaluations.

- Effective evaluations lead to meaningful design changes.

How is scoring conducted?

Validation with other EU projects is an important element of scoring: it ensures the questionnaire makes sense and allows it to be adapted over many iterations. The key is the insights gained when two experts discuss the findings. Recommendations are made, implemented, and reassessed in the design cycle, allowing progress to be tracked over time.

This approach enables you to demonstrate meaningful ethical progress to entities like the EU, showing how scores have improved and how ethics maturity has, for instance, doubled over the years.
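To make the idea of tracking progress concrete, here is a minimal sketch in Python. The scores and the scale are invented for illustration, and the function name `relative_change` is hypothetical; the workshop did not describe how scores are calculated, so this only shows how a change between assessment cycles could be expressed.

```python
def relative_change(scores):
    """Return the relative change from the first to the last assessment cycle.

    `scores` is a list of ethics scores, one per cycle, oldest first.
    A result of 1.0 means the score has doubled.
    """
    if len(scores) < 2:
        raise ValueError("need at least two assessment cycles to measure progress")
    first, last = scores[0], scores[-1]
    if first == 0:
        raise ValueError("the first score must be non-zero to compute a ratio")
    return (last - first) / first


# Hypothetical scores from three design cycles of the same project.
cycle_scores = [2.0, 3.0, 4.0]
print(f"Change since first assessment: {relative_change(cycle_scores):.0%}")
```

Because scores are relative to the application and its risk environment, a comparison like this is only meaningful within one project, not across projects.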

Looking forward, one of the biggest challenges is how to interpret the EU’s AI Act operationally and how to implement the regulation technically.

Bridging Technology and Sustainability by Sharath Chandra

The workshop titled “Bridging Technology and Sustainability: Intuitive Interfaces in Robotics” was conducted by Sharath Chandra.

Robots can be instrumental in mitigating climate change and can revolutionise how we approach sustainability challenges.

This was the overarching message that set the tone of the presentation.

Against the backdrop of an ageing workforce, Europe is already employing robots as assistive tools, not to replace the workforce but to play a supportive role, emphasised Sharath.

This human-robot interaction takes three forms: coexistence (working in parallel), cooperation (synchronised actions between robots and humans), and collaboration (working together on the same task).

Main challenges in Human-Robot Interaction

One of the main challenges is creating an easy-to-use interface. The interaction must be intuitive enough for people with process expertise, not just programming experts. Safety is paramount, and the system should be intelligent enough to handle multiple tasks.

One practical solution, according to Sharath, involves using a tablet PC to interact with the robot. For example, for screwing operations, the robot, equipped with lights and sensors, can be guided by a 3D scan. Humans can indicate where the screws should go by touching the robot and recording the positions on the tablet. This allows for fast configuration of the robot.

Alongside usability, adaptive robot speed is crucial for safety: the robot adjusts its speed based on the distance between itself and the human user.
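As a rough illustration of distance-based speed adaptation, the sketch below scales the robot's allowed speed linearly between a stop distance and a full-speed distance. The thresholds, the maximum speed, and the function name `scaled_speed` are assumptions made for this example, not values from the presentation or from any safety standard.

```python
def scaled_speed(distance_m,
                 stop_distance_m=0.5,        # assumed: robot halts at or below this
                 full_speed_distance_m=2.0,  # assumed: no limit at or beyond this
                 max_speed_mps=1.0):         # assumed maximum speed
    """Return the allowed robot speed (m/s) for a given human-robot distance (m)."""
    if distance_m <= stop_distance_m:
        return 0.0
    if distance_m >= full_speed_distance_m:
        return max_speed_mps
    # Linear ramp between the stop distance and the full-speed distance.
    fraction = (distance_m - stop_distance_m) / (full_speed_distance_m - stop_distance_m)
    return max_speed_mps * fraction


for d in (0.3, 1.25, 3.0):
    print(f"distance {d} m -> allowed speed {scaled_speed(d):.2f} m/s")
```

A real system would take its distances from validated safety sensors and its limits from a risk assessment; the linear ramp here is simply the easiest shape to read.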

Applications for sustainability: EV Batteries

Electric vehicle (EV) batteries typically require high capacity, posing several challenges:

- Productivity: Difficult processes to automate, leading to reliance on manual labour.

- Dangerous Jobs: Disassembling EV batteries is a dangerous task, taking up to 8 hours of manual labour.

- Capacity: There is a shortage of trained personnel.

- Information Barrier: Procedures are often not available for products and components.

Other challenges involved with EV batteries include:

- Understanding LCA: Clear understanding of Life Cycle Assessment (LCA) is crucial.

- Manufacturing: Battery packs are not designed with remanufacturing in mind.

- Digital Product Passport: Standardization is needed.

- Safety: High standards are required for high-voltage battery production.

- Agile Production: Emphasizing human-robot collaboration in low-volume, high-mix production environments.

By addressing these challenges, Sharath said, we can improve the interaction between humans and robots, enhancing productivity and safety in various industries, especially in the EV battery sector.

Citizen Science Games and AI by Iro Voulgari

“Gaming is known to improve learning outcomes, conveying messages and raising awareness among players,” said Iro Voulgari in her opening remarks before discussing the value of Citizen Science Games and AI. Games allow players to interact with complex systems and unpack the interplay between different elements.

Most importantly, and here is the punchline, games are particularly effective in changing behaviours, especially when it comes to environmental awareness. This is especially relevant here because the RECLAIM project includes a Recycling Data Game (RDG), designed and developed by the University of Malta’s Institute of Digital Games. The game aims to inform and engage players and to promote curiosity and awareness about recycling and climate change in order to change behaviour, while iteratively training AI modules for better waste identification and classification.

Within the gaming realm, two models enable learning and engagement: the common information deficit model and the procedural rhetoric model. The first assumes that wrong behaviours stem from a lack of information, but it falls short when multiple viewpoints and values are involved. The second offers players a more active role, enabling them to reach their own conclusions. This interaction fosters emergent dialogue, where meaning arises from community interactions and inspires players to think about their desired world. Players recognise the complexity of problems, the variety of perspectives, and the interconnections between factors.

In the RECLAIM project, we recognise the importance of engaging the public to solve scientific problems through citizen science games, which involve amateurs or non-professional scientists in producing new knowledge. Volunteers contribute to AI training by collecting or classifying images, helping to train algorithms and verify machine-predicted labels.
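One common way to combine several volunteers' answers for the same image is a majority vote. The sketch below is an illustration of that general technique only: it is not the RECLAIM game's actual pipeline, and the function names, labels, and the 60% agreement threshold are all assumptions.

```python
from collections import Counter


def aggregate_labels(volunteer_labels, min_agreement=0.6):
    """Return (consensus_label, agreement) for one image.

    consensus_label is None when the most common label does not reach
    the assumed minimum share of votes.
    """
    if not volunteer_labels:
        return None, 0.0
    label, count = Counter(volunteer_labels).most_common(1)[0]
    agreement = count / len(volunteer_labels)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement


def verify_prediction(machine_label, volunteer_labels, min_agreement=0.6):
    """Compare a machine-predicted label with the volunteers' consensus."""
    consensus, _ = aggregate_labels(volunteer_labels, min_agreement)
    if consensus is None:
        return "needs_review"   # volunteers disagree too much to decide
    return "confirmed" if consensus == machine_label else "corrected"


# Hypothetical votes from three players on one waste image.
votes = ["plastic", "plastic", "metal"]
print(verify_prediction("plastic", votes))
print(verify_prediction("metal", votes))
```

Images that end up as `needs_review` could be shown to more players or passed to an expert, which is one way to address the data-quality concern that comes with volunteer contributions.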

The staggering growth of AI and the collaboration between citizens and AI bring opportunities and challenges, as highlighted previously in this post. Some benefits include increased efficiency, accuracy, and resource savings. However, there are concerns about producing high-quality data and ensuring ethical transparency. The RECLAIM game, for instance, faces challenges in designing incentives for processing waste images. It considers both internal motivations, such as contributing to society and social impact, and external incentives, like rewards and community recognition.

Here, player motivations are crucial: players value contributing to solving local or national problems and gaining recognition for their efforts. Community building, communication, achievement, and recognition are significant factors. Incorporating quizzes and mini-facts that are relevant to everyday experiences can enhance engagement and make learning more interesting and impactful. This is also the objective of the RECLAIM Recycling Data Game.